Putin and Musk may be right: Industry experts say it is theoretically impossible to make sure AI won’t harm humans

12/04/2017 / By JD Heyes

As researchers continue to make advances in artificial intelligence, or AI, calls are growing louder and more frequent not simply to tread lightly with the technology but to abandon it completely.

And why? Because many fear that the technology will someday turn on humans and wipe all of us out.

One of the most vocal proponents of this theory has been entrepreneur and Tesla CEO Elon Musk who, along with scientist Stephen Hawking, signed an open letter to the tech industry in 2015 warning that AI would not only put tens of millions of people out of work but would likely make self-learning robots far more intelligent than humans in the long run. And when that happens, they say, we’ll be at risk of extinction from killer robots.

This warning was recently repeated, albeit in a somewhat less dramatic way, by Russian President Vladimir Putin, who told a million Russian schoolchildren via satellite video link in September that AI “is the future” for “all of mankind.”

“Whoever becomes the leader in this sphere will become ruler of the world,” he said.

Turns out that these men may be exactly right.

According to an essay published on the website of the IMDEA Networks Institute, a networking research organization with a multinational research team engaged in cutting-edge fundamental scientific research, “containing” a “superintelligence” AI system is “theoretically impossible,” meaning it could not be “taught” or “forced” to protect or defend humans.

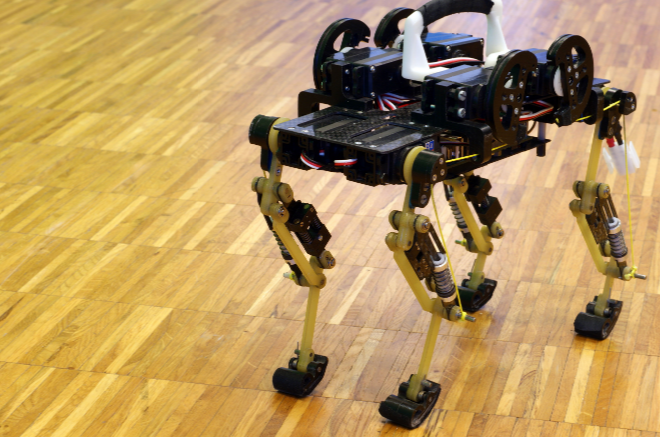

“Machines that ‘learn’ and make decisions on their own are proliferating in our daily lives via social networks and smartphones, and experts are already thinking about how we can engineer them so that they don’t go rogue,” the essay says.

Some of the suggestions made thus far include “training” self-learning machines to ignore specific kinds of data and information that might allow them to learn racism or sexism and coding them so that they demonstrate values of empathy and respect.

“But according to some new work from researchers at the Universidad Autónoma de Madrid, as well as other schools in Spain, the US, and Australia, once an AI becomes ‘superintelligent’ —think Ex Machina — it will be impossible to contain it,” the essay says.

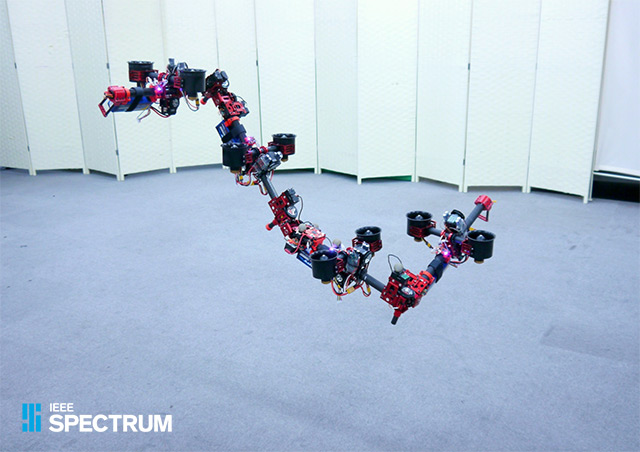

What would a “superintelligence” program look like? It likely would be one that contained every other program that exists, complete with the ability to obtain new programs as they become available.

And since AI is self-learning, a “superintelligence” robot, computer or device at that stage would be able to produce its own coding and programming in real time. That’s how fast these kinds of machines would learn, then produce and react.

Right now the thought that machines can rule the world is nothing more than a theory. But it’s one that has some very smart people very concerned about where AI will take us in the not-too-distant future.

Putin may not have alluded to military applications for AI in his chat with Russian schoolchildren, but don’t think for a moment he isn’t thinking about how to adapt the technology to existing (and future) weapons systems.

Rather than just building “killer” robots that can learn at an accelerated speed, imagine standard weapons systems that think and adapt on the fly.

Take ICBMs, for instance. At present, missiles have some ability to adjust in flight, thanks to satellite-fed data links. That makes them more difficult for anti-missile systems to hit.

But what if incoming ICBMs could spot threats and adjust to them in real time, without human intervention? They would become far more deadly and accurate – and far harder to defend against.

“AI research is likely to deliver a revolution in military technology on par with the invention of aircraft and nuclear weapons,” Gregory Allen wrote for CNN. “In other words, Musk is correct that each country is pursuing AI superiority and that this pursuit brings new risks.”

J. D. Heyes is a senior correspondent for the Natural News network of sites and editor of The National Sentinel.
